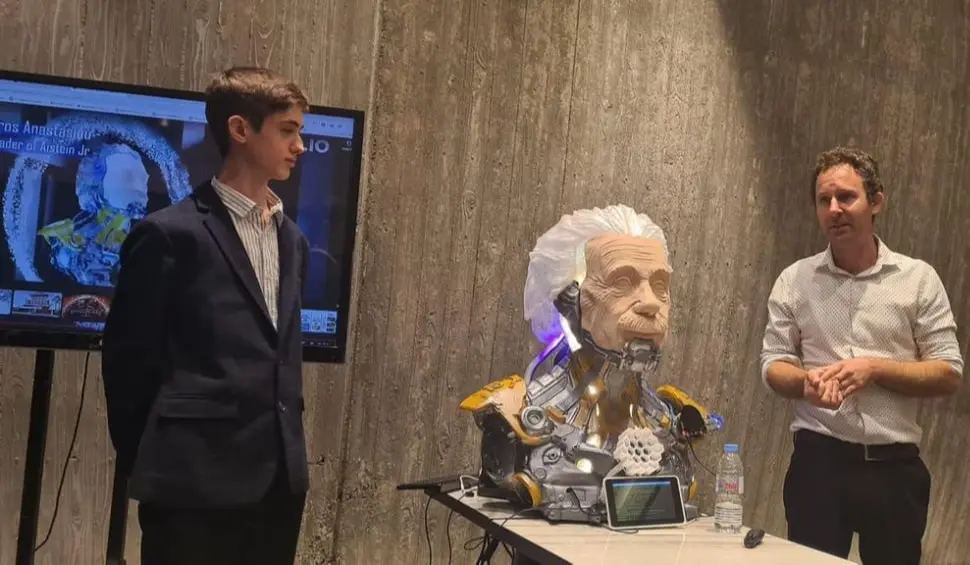

Early Beginnings with Ainstein Junior

My journey began with Ainstein Junior, a project I developed to revolutionize education through AI innovation. Designed to enhance the learning experience by providing real-time feedback and interaction, Ainstein Junior set the foundation for my later work in chatbot development. This project underscored the importance of low latency in user experience and the transformative potential of AI in everyday interactions.

Pushing the Boundaries of Response Time

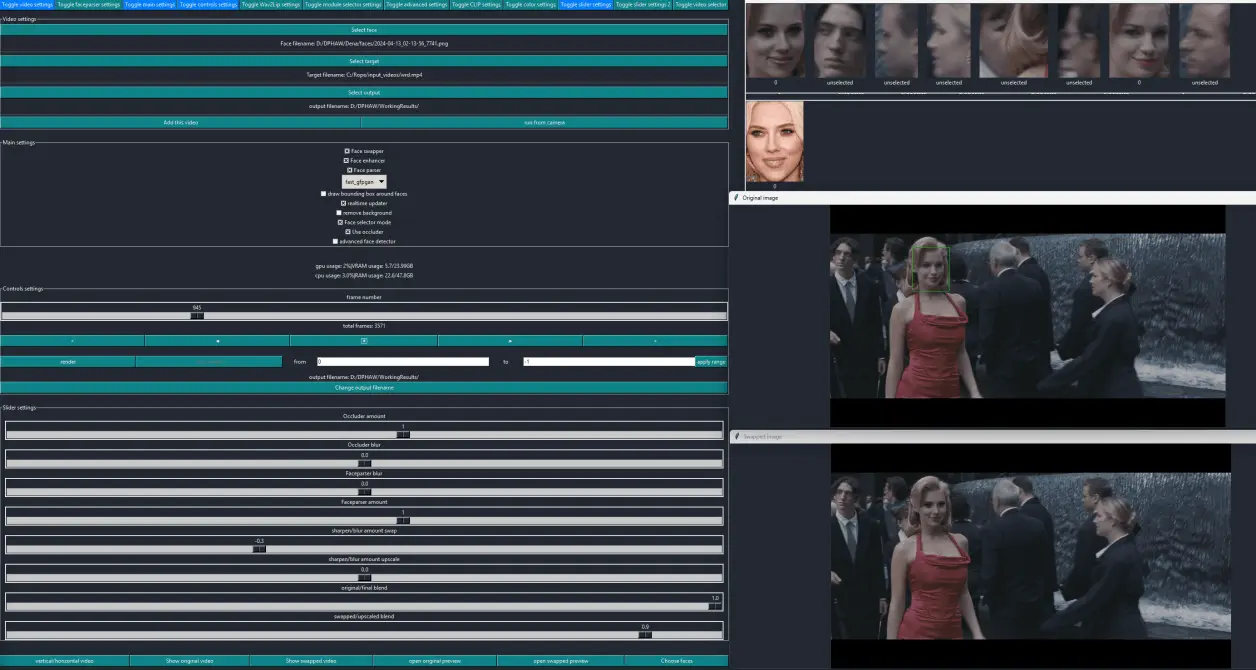

My goal was to cut response times from several seconds to fractions of a second, and I pursued it through iterative improvements. Drawing on recent developments in AI from large companies and the open-source community, I continuously refined my chatbots. Each iteration shaved milliseconds off the response time, bringing interactions close to the pace of natural human conversation. This work involved optimizing algorithms, improving data processing, and adopting the most recent AI models and APIs.

Key Innovations and Technologies

1. Streaming Responses: With streaming enabled, my chatbots begin transmitting a response as soon as its first part is generated, rather than waiting for the full reply. This reduces perceived latency and makes conversations feel more fluid.

2. Advanced Models and APIs: By utilizing the latest AI models and efficient transcription services, I optimized my chatbots for both speed and accuracy. These models process more tokens per second, allowing for faster and more contextually accurate responses.

3. Low-Latency Architecture: Inspired by research into high-performance, low-latency architectures, I incorporated these principles into my chatbots. This ensures they can handle a high volume of interactions efficiently, making them suitable for various applications from customer service to interactive education.
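
To make the streaming idea from item 1 concrete, here is a minimal Python sketch. It is not the code behind my chatbots: `generate_tokens` is a hypothetical stand-in for a model that emits one token at a time, and the 10 ms per-token delay is an assumed value chosen only for illustration. The sketch compares how long a user waits for the first visible output when the reply is streamed versus when it is buffered until complete.

```python
import time
from typing import Iterator


def generate_tokens(reply: str, delay_s: float = 0.01) -> Iterator[str]:
    """Hypothetical model stand-in: yields one token at a time."""
    for token in reply.split():
        time.sleep(delay_s)  # assumed per-token generation cost
        yield token + " "


def streamed(tokens: Iterator[str]) -> Iterator[str]:
    """Forward each token to the client as soon as it is available."""
    yield from tokens


def buffered(tokens: Iterator[str]) -> Iterator[str]:
    """Wait for the whole reply, then send it as a single chunk."""
    yield "".join(tokens)


def time_to_first_output(chunks: Iterator[str]) -> float:
    """Seconds until the first chunk reaches the client."""
    start = time.perf_counter()
    next(chunks)
    return time.perf_counter() - start


if __name__ == "__main__":
    reply = "Streaming lets the user start reading before generation ends"
    t_stream = time_to_first_output(streamed(generate_tokens(reply)))
    t_buffer = time_to_first_output(buffered(generate_tokens(reply)))
    print(f"streamed first output: {t_stream * 1000:.0f} ms")
    print(f"buffered first output: {t_buffer * 1000:.0f} ms")
```

Total generation time is the same in both cases. What changes is the wait before anything appears: roughly one token's worth of delay when streaming, versus the whole reply's worth when buffering.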